Transform. Serve. Scale.

Your Journey to Production Machine Learning Starts Now

Build, automate, and centralize production-ready batch, streaming, and real-time data pipelines to power any ML application with fresh ML features on demand

More than just a feature store

The Complete Feature Platform to Build, Automate, and Centralize Data Pipelines for Production Machine Learning

Deploy Production Machine Learning Pipelines in Minutes

Create robust data pipelines from just a few lines of code to power one model or thousands simultaneously. Tecton automatically compiles, orchestrates, and maintains them for you

Accelerate Your Time-to-Value

Unify your ML data workflows on a single platform to encourage feature reuse, iterate quickly in complex production environments, and speed up deployment across use cases

Future-Proof Your Stack for the Demands of Real-Time ML

No data left behind: put every data type to work for current batch use cases and move smoothly to real-time ones, building lasting value into your ML platform as it grows

Ensure Mission-Critical Reliability and Control Costs

Serve features at extreme scale, reduce infrastructure overhead, and optimize cloud spend, confident that your systems will always be up and running

The problem we solve


Data Engineering for Real-Time ML Is Hard

- Training/serving skew

- Point-in-time correctness

- Productionizing notebooks

- Real-time transformations

- Combining batch and real-time data

- Complicated backfills

- Latency constraints

- Ensuring service levels at scale
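Two of the problems listed above, training/serving skew and point-in-time correctness, come down to one rule: a training row may only use feature values that were already known when its label event happened. The sketch below shows such a point-in-time join in plain pandas with `pandas.merge_asof`. It is a minimal illustration, not Tecton's implementation, and the tables, column names, and values are hypothetical.

```python
import pandas as pd

# Hypothetical label events: when each prediction was made and its outcome.
labels = pd.DataFrame({
    "user_id": ["u1", "u1", "u2"],
    "timestamp": pd.to_datetime(["2024-01-02", "2024-01-05", "2024-01-04"]),
    "is_fraud": [0, 1, 0],
})

# Hypothetical feature history: each value became available at `timestamp`.
features = pd.DataFrame({
    "user_id": ["u1", "u1", "u2", "u2"],
    "timestamp": pd.to_datetime(["2024-01-01", "2024-01-04", "2024-01-03", "2024-01-06"]),
    "amount_mean_7d": [10.0, 25.0, 5.0, 50.0],
})


def point_in_time_join(labels: pd.DataFrame, features: pd.DataFrame) -> pd.DataFrame:
    """Attach to each label row the latest feature value known at or before its timestamp."""
    # merge_asof requires both frames to be sorted by the `on` column.
    return pd.merge_asof(
        labels.sort_values("timestamp"),
        features.sort_values("timestamp"),
        on="timestamp",
        by="user_id",          # only match rows for the same user
        direction="backward",  # never use values from the future
    )


training_df = point_in_time_join(labels, features)
print(training_df)
```

A naive join on each user's latest feature row would give `u2` the value 50.0, which only became available on 2024-01-06, two days after that label; `direction="backward"` rules that leakage out.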

A complete platform

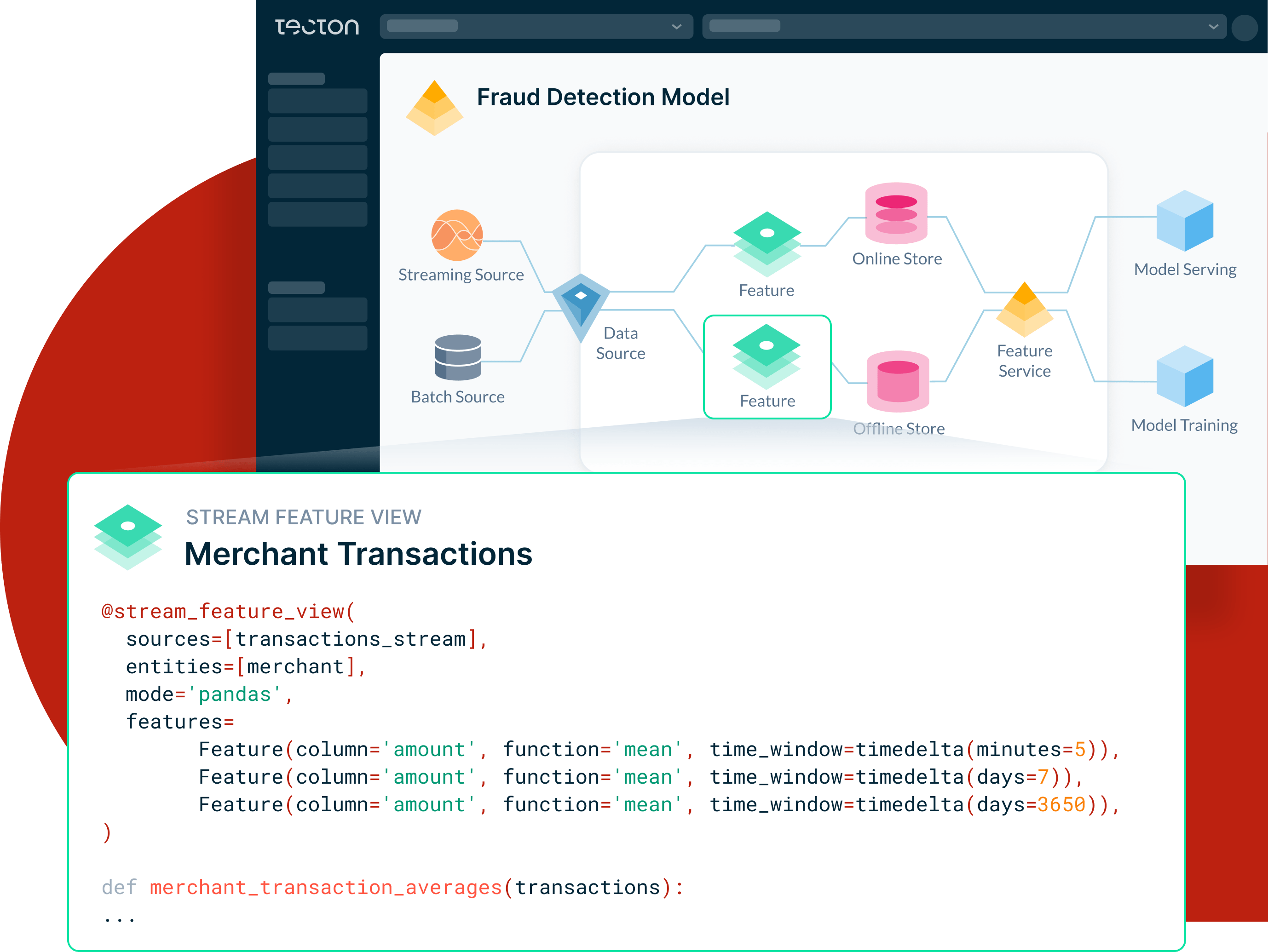

Design and Manage the Entire ML Feature Lifecycle

From simple declarative transformation logic to fresh feature values you can store, serve, and monitor, all in real time.

Feature Management: Discover, Use, Monitor, and Govern End-to-End Feature Pipelines

With Tecton, anyone on the team can discover and use existing features while also monitoring the associated data pipelines, serving latencies, processing costs, and underlying systems of their machine learning applications.

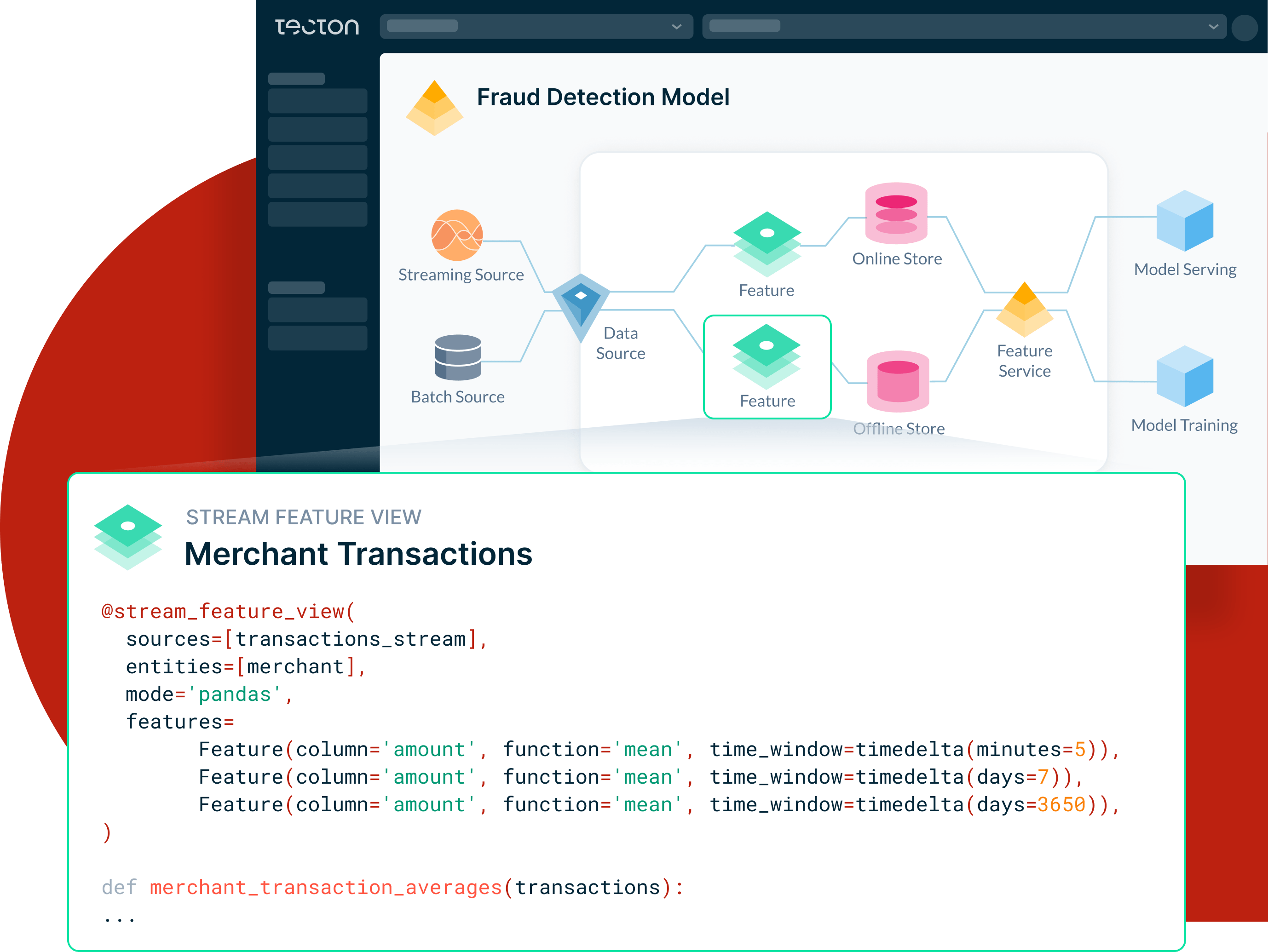

Feature Logic: Design and Define ML Features

Using the Tecton SDK in a notebook or any other Python environment, users can define feature logic in Python, SQL, or Spark, and rely on Tecton to run complex data transformations such as time-window aggregations and to generate training data with accurate backfills.

```python
from datetime import timedelta

import pandas as pd
from tecton import batch_feature_view, on_demand_feature_view, stream_feature_view
from tecton.types import Float64

# `Feature`, the data sources (transactions, transactions_stream, transaction_request)
# and the entities (user, merchant) are assumed to be defined or imported elsewhere
# in the feature repository.

# Specify inputs, entities and compute configuration
@batch_feature_view(
    description="Mean user transaction amount over the last 7 and 30 days, plus 30-day standard deviation, updated daily",
    sources=[transactions],
    entities=[user],
    mode='pandas',
    batch_schedule=timedelta(days=1),
    features=[
        Feature(column='amount', function='mean', time_window=timedelta(days=7)),
        Feature(column='amount', function='mean', time_window=timedelta(days=30)),
        # Needed by the z-score feature below (amount_stddev_pop_30d_1d)
        Feature(column='amount', function='stddev_pop', time_window=timedelta(days=30)),
    ],
)
# Define transformation logic: drop refunds before aggregating
def user_transaction_metrics(transactions):
    filtered_transactions = transactions[transactions['transaction_type'] != 'refund']
    return filtered_transactions[['user_id', 'timestamp', 'amount']]


# Specify inputs, entities and compute configuration
@stream_feature_view(
    description="Mean transaction amount over the last 5 minutes, 7 days and 10 years, updated every 5 minutes",
    sources=[transactions_stream],
    entities=[merchant],
    mode='pandas',
    # Define logic for time window aggregations
    features=[
        Feature(column='amount', function='mean', time_window=timedelta(minutes=5)),
        Feature(column='amount', function='mean', time_window=timedelta(days=7)),
        Feature(column='amount', function='mean', time_window=timedelta(days=3650)),  # ~10 years
    ],
)
# Define transformation logic: drop refunds before aggregating
def merchant_transaction_averages(transactions):
    filtered_transactions = transactions[transactions['transaction_type'] != 'refund']
    return filtered_transactions[['merchant_id', 'timestamp', 'amount']]


# Specify inputs and compute configuration
@on_demand_feature_view(
    description="How much of an outlier the latest transaction is",
    sources=[transaction_request, user_transaction_metrics],
    mode='pandas',
    features=[Feature('zscore_transaction_amount', Float64)],
)
# Define transformation logic: z-score of the request amount against the user's 30-day history
def zscore_current_transaction(transaction_request, user_transaction_metrics):
    result = pd.DataFrame()
    result['zscore_transaction_amount'] = (
        (transaction_request['amt'] - user_transaction_metrics['amount_mean_30d_1d'])
        / user_transaction_metrics['amount_stddev_pop_30d_1d']
    )
    return result
```
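For intuition, here is roughly what the 7-day and 30-day mean aggregations declared in `user_transaction_metrics` compute, written in plain pandas and evaluated as of each transaction. This is a hypothetical sketch, not how Tecton executes aggregations; the sample data and the `trailing_means` helper are invented for illustration.

```python
import pandas as pd

# Hypothetical raw transactions; the refund must not count toward the means.
transactions = pd.DataFrame({
    "user_id": ["u1", "u1", "u1", "u1", "u2"],
    "timestamp": pd.to_datetime(
        ["2024-01-01", "2024-01-05", "2024-01-06", "2024-01-20", "2024-01-03"]
    ),
    "amount": [10.0, 30.0, 100.0, 50.0, 7.0],
    "transaction_type": ["purchase", "purchase", "refund", "purchase", "purchase"],
})


def trailing_means(transactions: pd.DataFrame, windows=("7D", "30D")) -> pd.DataFrame:
    """Mean of `amount` per user over each trailing time window, as of each transaction."""
    # Same filtering step as the user_transaction_metrics transformation.
    df = transactions[transactions["transaction_type"] != "refund"]
    df = df.sort_values(["user_id", "timestamp"]).reset_index(drop=True)
    out = df[["user_id", "timestamp"]].copy()
    for window in windows:
        means = []
        # sort=False yields groups in first-seen order, which matches the sorted rows.
        for _, group in df.groupby("user_id", sort=False):
            # A time-based window over a DatetimeIndex covers (t - window, t].
            rolled = group.set_index("timestamp")["amount"].rolling(window).mean()
            means.extend(rolled.tolist())
        out[f"amount_mean_{window.lower()}"] = means
    return out


print(trailing_means(transactions))
```

The refund on 2024-01-06 is dropped before aggregating, and by 2024-01-20 the 7-day window no longer reaches the purchases from January 1 and 5, while the 30-day window still does.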

Feature Repository: Register and Collaborate on Feature Definitions

Tecton’s feature repository lets users manage feature definitions as files in a repository: they define features in code, version-control them in git, unit-test them, and roll them out safely through continuous delivery pipelines. Tecton brings battle-tested DevOps practices to feature engineering.
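Because the transformation in `user_transaction_metrics` is ordinary pandas code, its logic can be unit-tested like any other function. The pytest-style sketch below assumes the function body is copied out without its decorator so that only pandas is needed; the test name and data are hypothetical, and any testing utilities the Tecton SDK itself offers are not shown.

```python
import pandas as pd


# Plain copy of the user_transaction_metrics transformation, without the decorator.
def user_transaction_metrics(transactions: pd.DataFrame) -> pd.DataFrame:
    filtered_transactions = transactions[transactions["transaction_type"] != "refund"]
    return filtered_transactions[["user_id", "timestamp", "amount"]]


def test_user_transaction_metrics_drops_refunds():
    raw = pd.DataFrame({
        "user_id": ["u1", "u1", "u2"],
        "timestamp": pd.to_datetime(["2024-01-01", "2024-01-02", "2024-01-03"]),
        "amount": [10.0, 99.0, 5.0],
        "transaction_type": ["purchase", "refund", "purchase"],
    })
    result = user_transaction_metrics(raw)
    # Refund rows are removed and only the columns the aggregations need remain.
    assert list(result.columns) == ["user_id", "timestamp", "amount"]
    assert result["amount"].tolist() == [10.0, 5.0]


if __name__ == "__main__":
    test_user_transaction_metrics_drops_refunds()
    print("ok")
```

Under pytest, the `test_` prefix is enough for the function to be collected; a CI pipeline can run it before a new feature definition is rolled out.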

Feature Engine: Transform Raw Data Into Fresh Feature Values

Tecton integrates with existing data processing and storage infrastructure to automatically compile the underlying data pipelines that compute batch, streaming, or real-time features, shielding end users from their complexity.

Feature Store: Store and Serve Fresh Feature Values

Based on user-defined requirements, Tecton helps organizations scale compute, storage, and serving independently to match usage patterns. It uses an offline store for large-scale, low-cost retrieval (training) and an online store for low-latency retrieval (online serving), so Tecton's feature store provides uninterrupted access to fresh features on demand.
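The offline/online split can be pictured with a toy in-memory model. Materialization writes every computed value to an append-only history (offline, scanned for training data) and keeps only the freshest value per entity (online, read with a single key lookup). The `ToyFeatureStore` class is hypothetical and only sketches the idea; in practice the offline store is typically a data lake or warehouse and the online store a key-value database.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Any, Dict, List, Tuple


@dataclass
class ToyFeatureStore:
    # Offline: full history of (entity_key, timestamp, value), cheap to scan in bulk.
    offline: List[Tuple[str, datetime, Any]] = field(default_factory=list)
    # Online: latest (timestamp, value) per entity key, for fast point lookups.
    online: Dict[str, Tuple[datetime, Any]] = field(default_factory=dict)

    def materialize(self, entity_key: str, ts: datetime, value: Any) -> None:
        """Write one computed feature value to both stores."""
        self.offline.append((entity_key, ts, value))
        current = self.online.get(entity_key)
        # Late-arriving older values (e.g. from a backfill) must not overwrite fresher ones.
        if current is None or ts >= current[0]:
            self.online[entity_key] = (ts, value)

    def get_online(self, entity_key: str) -> Any:
        """Serving path: one dictionary lookup per request."""
        entry = self.online.get(entity_key)
        return None if entry is None else entry[1]

    def get_offline_as_of(self, entity_key: str, as_of: datetime) -> Any:
        """Training path: latest value at or before `as_of`, found by scanning history."""
        candidates = [(ts, v) for k, ts, v in self.offline if k == entity_key and ts <= as_of]
        if not candidates:
            return None
        return max(candidates, key=lambda c: c[0])[1]


store = ToyFeatureStore()
store.materialize("u1", datetime(2024, 1, 1), 10.0)
store.materialize("u1", datetime(2024, 1, 8), 25.0)
print(store.get_online("u1"))                               # 25.0
print(store.get_offline_as_of("u1", datetime(2024, 1, 5)))  # 10.0
```

Serving only ever needs the newest value, while training needs the value as of each historical label, which is why the two paths are kept in separate stores with different cost and latency profiles.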


Battle tested for the enterprise

Reliability and Scale You Expect From a Production System

Fully Managed and Cloud Native

Tecton makes it easy to deploy and operate machine learning with a managed, cloud-native service.

Built for Availability and Scale

Tecton is built for scale, delivering median latencies of ~5 ms and supporting over 100,000 requests per second.

Integrates With Your Existing Stack

Tecton is not a database or a processing engine. It plugs into and orchestrates on top of your existing storage and processing infrastructure.

Secure and Compliant

Tecton authenticates users via SSO and supports access control lists. We support GDPR compliance in your ML applications and are SOC 2 Type 2 certified.