Fresh data, fast decisions.

The feature store for real-time machine learning at scale.

Trusted by top engineering teams

The feature store for ML engineers, built by the creators of Uber’s Michelangelo.

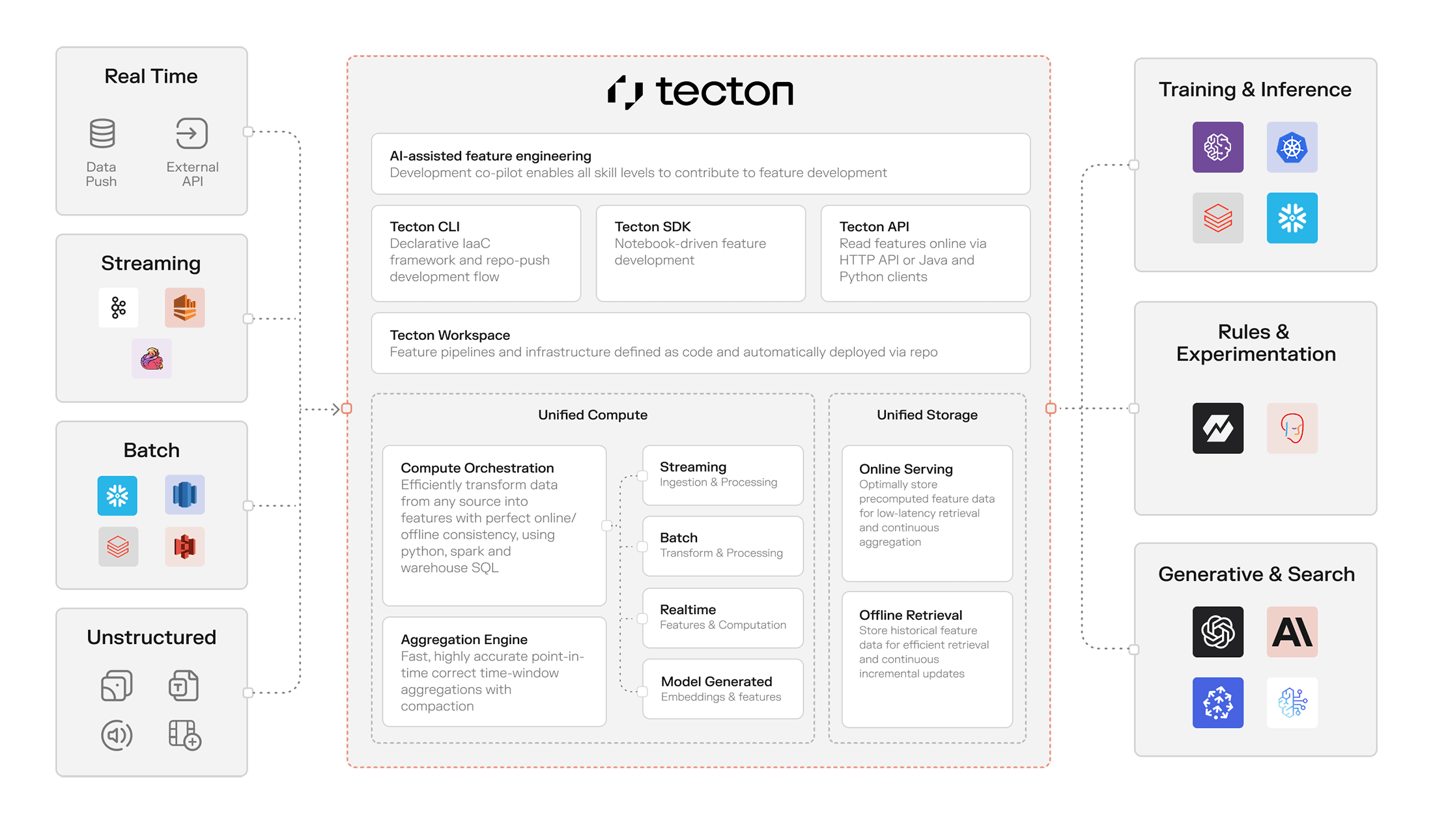

Turn your raw data into production-ready features for business-critical use cases like fraud detection, risk scoring, and personalization. Tecton powers real-time decisions at scale—no pipeline rewrites required.

Never write another data pipeline by hand.

Provision your ML data pipelines using a standardized infrastructure-as-code description. Tecton automatically builds, updates, and manages the infrastructure, so you don’t have to.

Built for Real-Time ML

Transform raw data into ML-ready features with sub-second freshness and serve them at sub-10ms latency.

Fast Iteration, Safe Deployment

Accelerate feature development with consistency from training to serving: no rewrites, no skew.

Reliable at Enterprise Scale

Proven at 100K+ QPS with 99.99% uptime for real-time ML use cases.

For ML engineers with real-time use cases.

# Fraud Detection

Stop fraud in milliseconds with real-time behavioral signals.

# Risk Decisioning

Make instant decisions with streaming features and up-to-date applicant data.

# Credit Scoring

Deliver accurate, real-time credit decisions with fresh behavioral and historical data.

# Personalization

Tailor every product experience in real time with contextual data.

# Define

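```python
from datetime import datetime, timedelta

from tecton import Aggregate, batch_feature_view
from tecton.types import Field, Int32

# `transactions_batch` (a batch data source) and `merchant` (an entity)
# are defined elsewhere in the feature repository.


@batch_feature_view(
    sources=[transactions_batch],
    entities=[merchant],
    mode='pandas',
    online=True,
    offline=True,
    aggregation_interval=timedelta(days=1),
    features=[
        # The mean of a 0/1 label is the fraud rate over each window
        Aggregate(input_column=Field('is_fraud', Int32), function='mean',
                  time_window=timedelta(days=1)),
        Aggregate(input_column=Field('is_fraud', Int32), function='mean',
                  time_window=timedelta(days=30)),
        Aggregate(input_column=Field('is_fraud', Int32), function='mean',
                  time_window=timedelta(days=90)),
    ],
    feature_start_time=datetime(2022, 5, 1),
    description=('The merchant fraud rate over a series of time windows, '
                 'updated daily.'),
    timestamp_field='timestamp',
)
def merchant_fraud_rate(transactions_batch):
    # Keep the join key, the label to aggregate, and the event time
    return transactions_batch[['merchant', 'is_fraud', 'timestamp']]
```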

```shell
$ tecton workspace select prod
$ tecton apply
```
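To make the feature view above concrete: each `Aggregate` takes the mean of the 0/1 `is_fraud` label, which is the merchant's fraud rate over that window. The plain-Python sketch below computes the same three rates for a single point in time. It is an illustration, not Tecton code: the helper `fraud_rate_as_of`, the tuple layout, and the sample transactions are hypothetical, and it does not model how Tecton materializes the windows on the daily `aggregation_interval`.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def fraud_rate_as_of(transactions, as_of, windows_days=(1, 30, 90)):
    """Hypothetical helper: per-merchant mean of `is_fraud` over trailing windows.

    `transactions` is an iterable of (merchant, is_fraud, timestamp) tuples.
    A window of d days covers events with as_of - d <= timestamp < as_of.
    Returns {merchant: {window_days: fraud_rate}}; a merchant with no events
    in a window has no entry for that window.
    """
    # (merchant, window_days) -> [fraud_count, event_count]
    totals = defaultdict(lambda: [0, 0])
    for merchant, is_fraud, ts in transactions:
        for days in windows_days:
            if as_of - timedelta(days=days) <= ts < as_of:
                totals[(merchant, days)][0] += is_fraud
                totals[(merchant, days)][1] += 1
    rates = defaultdict(dict)
    for (merchant, days), (fraud, count) in totals.items():
        rates[merchant][days] = fraud / count
    return dict(rates)


# Hypothetical sample transactions: (merchant, is_fraud, timestamp)
transactions = [
    ('m1', 1, datetime(2022, 6, 30, 12)),
    ('m1', 0, datetime(2022, 6, 15)),
    ('m1', 0, datetime(2022, 4, 10)),
    ('m2', 0, datetime(2022, 6, 30, 8)),
    ('m2', 1, datetime(2022, 5, 1)),
]
rates = fraud_rate_as_of(transactions, as_of=datetime(2022, 7, 1))
```

For `m1`, the 1-, 30-, and 90-day rates come out to 1.0, 0.5, and 1/3: each longer window pulls in older, non-fraudulent transactions.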

Metrics that matter to your business.

Time-to-production reduced from 3 months to just 1 day

Growth in live machine learning use cases in one year

Annual savings through improved fraud prevention

What makes Tecton different.

Define your features once in code, then get automatic streaming backfills; flexible compute across Python, Spark, and SQL; and guaranteed training–serving consistency, so your models see the same feature values in production as in training.

Flexible & Unified Compute

Mix and match Python (Ray & Arrow), Spark, and SQL compute for simplicity and performance

Online/Offline Consistency

Feature correctness guaranteed, accounting for data processing delays and materialization windows
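One common way to get this kind of correctness when building training data is a point-in-time lookup that also accounts for processing delay: a training row labeled at time t may only use a feature value that online serving would already have had at t. The sketch below is a generic plain-Python illustration, not Tecton's implementation; `point_in_time_lookup`, the feature values, and the two-hour delay are hypothetical.

```python
import bisect
from datetime import datetime, timedelta


def point_in_time_lookup(feature_rows, label_time, processing_delay):
    """Hypothetical helper: latest feature value available at `label_time`.

    feature_rows: list of (event_time, value) tuples sorted by event_time.
    A value computed for event_time becomes usable only at
    event_time + processing_delay, so the lookup must not see it earlier.
    Returns None when no value was available yet.
    """
    cutoff = label_time - processing_delay
    times = [t for t, _ in feature_rows]
    # Index of the last row whose event_time is <= cutoff
    i = bisect.bisect_right(times, cutoff) - 1
    return feature_rows[i][1] if i >= 0 else None


# Hypothetical daily fraud-rate values for one merchant
rows = [
    (datetime(2022, 6, 1), 0.02),
    (datetime(2022, 6, 2), 0.05),
]
delay = timedelta(hours=2)

# At 01:00 on June 2 the June 2 value is still being processed,
# so the lookup falls back to the June 1 value.
value = point_in_time_lookup(rows, datetime(2022, 6, 2, 1), delay)
```

Passing `timedelta(0)` as the delay would return the June 2 value at 01:00, which is exactly the kind of leak that accounting for processing delay prevents.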

Ultra-low Latency Serving

Sub-10ms latency with support for DynamoDB and Redis, built-in caching, autoscaling, and SLA-driven design
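Built-in caching generally trades a bounded amount of staleness for fewer online-store reads. A generic read-through cache with a time-to-live illustrates that trade-off. This is not Tecton's caching implementation or API: `TTLCache` is hypothetical, and the `fetch` callable stands in for a Redis or DynamoDB read.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class TTLCache:
    """Hypothetical read-through cache in front of a slower online-store lookup.

    An entry expires `ttl_seconds` after it was fetched, which bounds how
    stale a cached feature value can be.
    """

    def __init__(self, fetch: Callable[[Hashable], object], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, object]] = {}

    def get(self, key: Hashable) -> object:
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self._ttl:
            return hit[1]  # fresh enough: skip the store round trip
        value = self._fetch(key)  # miss or expired: read through to the store
        self._entries[key] = (now, value)
        return value


# Usage with a stand-in store and a controllable clock (both hypothetical)
store_reads = []


def read_from_store(key):
    store_reads.append(key)
    return {'merchant_fraud_rate_30d': 0.5}


fake_now = [0.0]
cache = TTLCache(read_from_store, ttl_seconds=1.0, clock=lambda: fake_now[0])
cache.get('m1')  # miss: reads the store
cache.get('m1')  # hit: served from memory
fake_now[0] = 1.5
cache.get('m1')  # expired: reads the store again
```

A shorter TTL keeps cached values fresher at the cost of more store reads, so features that need sub-second freshness can only tolerate a correspondingly small TTL.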

Streaming Aggregation Engine

Immediate freshness, ultra-low latency at high scale, supporting multi-year windows and millions of events
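A common way to keep long sliding windows cheap on a stream is to pre-aggregate events into fixed time tiles and combine only the relevant tiles at query time. The sketch below illustrates that generic technique in plain Python. It is not Tecton's engine; `TiledWindowAggregate`, its parameters, and the sample events are hypothetical.

```python
from collections import defaultdict


class TiledWindowAggregate:
    """Hypothetical sliding-window sum/count built from fixed-size time tiles.

    Each event updates one tile's partial aggregate in O(1); a window query
    combines the tiles it covers instead of rescanning raw events. Windows
    are aligned to tile boundaries.
    """

    def __init__(self, tile_seconds):
        self.tile_seconds = tile_seconds
        # tile index -> [sum, count]
        self.tiles = defaultdict(lambda: [0.0, 0])

    def add(self, ts, value):
        partial = self.tiles[int(ts // self.tile_seconds)]
        partial[0] += value
        partial[1] += 1

    def window(self, now, num_tiles):
        """Sum and count over the `num_tiles` tiles ending with the one containing `now`."""
        last = int(now // self.tile_seconds)
        total, count = 0.0, 0
        for idx in range(last - num_tiles + 1, last + 1):
            partial = self.tiles.get(idx)  # .get() does not create empty tiles
            if partial is not None:
                total += partial[0]
                count += partial[1]
        return total, count


# One-minute tiles; events are (seconds, is_fraud)
agg = TiledWindowAggregate(tile_seconds=60)
for ts, is_fraud in [(10, 1), (70, 0), (130, 1), (185, 1)]:
    agg.add(ts, is_fraud)

# Last two minutes (tiles 2 and 3): two events, both fraudulent
total, count = agg.window(now=190, num_tiles=2)
```

Each event touches one tile, and a query reads at most `num_tiles` tiles no matter how many raw events fell in the window. A production system would also evict tiles older than its longest window; this sketch keeps them all.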

Automated Streaming Backfills

Backfills generated from streaming feature code—no separate pipelines required
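The idea behind generating backfills from streaming feature code is that a single transformation definition drives both live updates and the recomputation of history, so the two cannot drift apart. The plain-Python sketch below shows that idea by replaying logged events through the same update function used on the live path. It is a generic illustration, not how Tecton schedules or executes backfills; `update_state`, `backfill`, and the event tuples are hypothetical.

```python
from datetime import datetime


def update_state(state, event):
    """Hypothetical streaming transformation: per-merchant (fraud_count, event_count)."""
    merchant, is_fraud, _ts = event
    fraud, total = state.get(merchant, (0, 0))
    state[merchant] = (fraud + is_fraud, total + 1)
    return state


def backfill(historical_events, start, end):
    """Rebuild state for [start, end) by replaying logged events in time
    order through the same update function the live stream uses."""
    state = {}
    for event in sorted(historical_events, key=lambda e: e[2]):
        if start <= event[2] < end:
            update_state(state, event)
    return state


# Hypothetical events: (merchant, is_fraud, timestamp)
events = [
    ('m1', 1, datetime(2022, 6, 1, 9)),
    ('m2', 0, datetime(2022, 6, 1, 10)),
    ('m1', 0, datetime(2022, 6, 2, 11)),
]

# Live path: events are applied one at a time as they arrive
live_state = {}
for e in events:
    update_state(live_state, e)

# Backfill path: same logic, driven by historical data instead of the stream
backfilled = backfill(events, datetime(2022, 6, 1), datetime(2022, 6, 3))
```

Because both paths call `update_state`, the backfilled state matches the live state for the same events.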

Dev-Ready Declarative Framework

Pipelines deployed via code, with native support for CI/CD, version control, unit testing, lineage, and monitoring

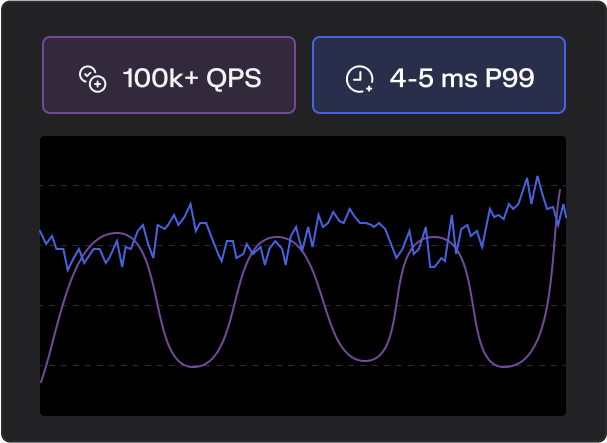

Proven performance and reliability at enterprise scale.

Sub-100 ms p99 latency and 99.99% uptime keep your features fresh and your services available. Auto-scaling and smart routing between Redis and DynamoDB deliver peak performance without any manual tuning.

Always fast, always on

Sub-100 ms p99 serving latency and 99.99% uptime at 100K+ QPS

Tecton delivers sub-second feature freshness, even for lifetime and sliding-window aggregations on streaming data, automatically scaling to absorb traffic spikes with zero manual intervention.

Built for scale

Billions of daily ML decisions at Fortune 100 enterprises

Tecton powers fraud, risk, and personalization models worldwide, with built-in disaster recovery, failover, and point-in-time restores to keep you up and running everywhere.

Efficient & Cost-Effective

Tuned to deliver the right latency at the best price

Tecton lets you tailor infrastructure per feature, choosing the best compute and serving for each use case. Whether it’s Redis or DynamoDB, Ray or Spark, you get full flexibility without added complexity.

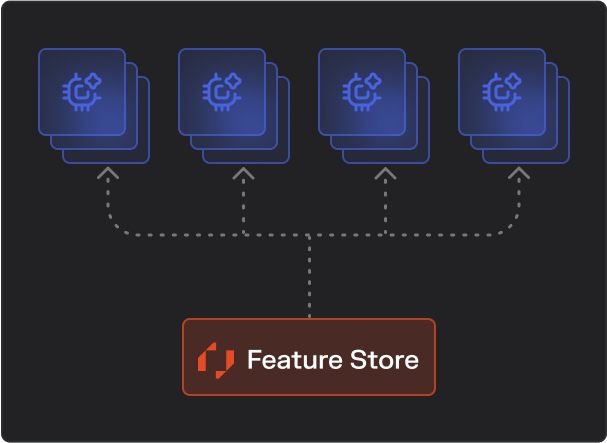

The trusted choice for real-time ML applications.

Short Time to Production

Declarative Python framework and infrastructure as code to rapidly deploy data pipelines

Incorporating Fresh Signals

Native streaming and real-time features incorporate the right signals and improve fraud and risk model quality

Online/Offline Consistency

Eliminating train-serve skew to ensure the accuracy of fraud and risk predictions

Seamless CI/CD Integration

Easy integration into your DevOps workflows

Meeting Latency and Availability Requirements at High Scale

Reliable and efficient feature access at massive scale and low latency

Enterprise-grade Infrastructure

ISO 27001, SOC 2 Type 2, and PCI compliant, meeting the security and deployment requirements of financial services (FSI)

Trusted by top ML, risk, and data teams

“What shines about Tecton is the feature engineering experience—that developer workflow. From the very beginning, when you’re onboarding a new data source and building a feature on Tecton, you’re working with production data, and that makes it really easy to rapidly iterate.”

"When we first started building our own feature workflows, it took months—often three months—to get a feature from prototype into production. These days, with Tecton, it’s quite viable to build a feature within one day. Tecton has been a game changer for both workflow and efficiency."

"With Tecton as part of our stack, we’re really focused on understanding trends, disrupting fraud rings and delivering near-instant decisions—without having to build and maintain our own feature-freshness infrastructure."

"For credit specifically, we leveraged a roughly 50% increase in approval rate while decreasing losses by about 5%. Fraud transaction monitoring was even more extreme—4× the chance of blocking a fraud transaction while blocking 20% fewer legitimate ones."

"Prior to Tecton, our features were generated independently with individual Spark pipelines. They were not built for sharing, they were often not cataloged, and we lacked the ability to serve features for real-time inference."

"In just a week or two of work (instead of months of plumbing), we had real-time features feeding our search-ranking models, letting us A/B-test session-level personalization immediately."

Platforms at HomeToGo