
How Atlassian Improved Model Accuracy and Deployment Times

40x fewer errors

Increased data consistency from 96% to 99.9%

90x faster

From 3 months to 1 day to deploy new features

3 FTEs saved

Freed from maintaining Atlassian’s initial homebuilt feature store

About Atlassian

Atlassian, a leading provider of team collaboration software, aimed to enhance user experiences using machine learning (ML). With billions of daily events and over 1 million predictions made each day, Atlassian faced significant challenges in scaling their ML infrastructure. This led them to implement Tecton’s feature platform as a core component of their ML stack.

In this 2-minute video, see how Atlassian supercharged their ML workflow with Tecton. Joshua Hanson, Principal Engineer at Atlassian, shares how the ML team cut feature development time from months to a single day, drove higher model accuracy, and effortlessly scaled feature serving to millions.


40x fewer errors: From 96% to 99.9% serving and training data consistency

When Atlassian’s Search and Smarts team began focusing on ML applications, such as predicting user mentions and improving search functionality, they quickly realized their existing infrastructure wasn’t equipped to handle the complexities of ML at scale. With billions of events generated daily and over 1 million individual predictions made each day, the team faced significant challenges in maintaining consistency between training and serving data.

Joshua Hanson, Principal Engineer on the ML platform team, observed that the company had complex data pipelines running in multiple environments. “Managing feature engineering and model deployments across these stacks was challenging and time-consuming,” Hanson notes. “We often saw discrepancies between our training data and what was being served in production.”

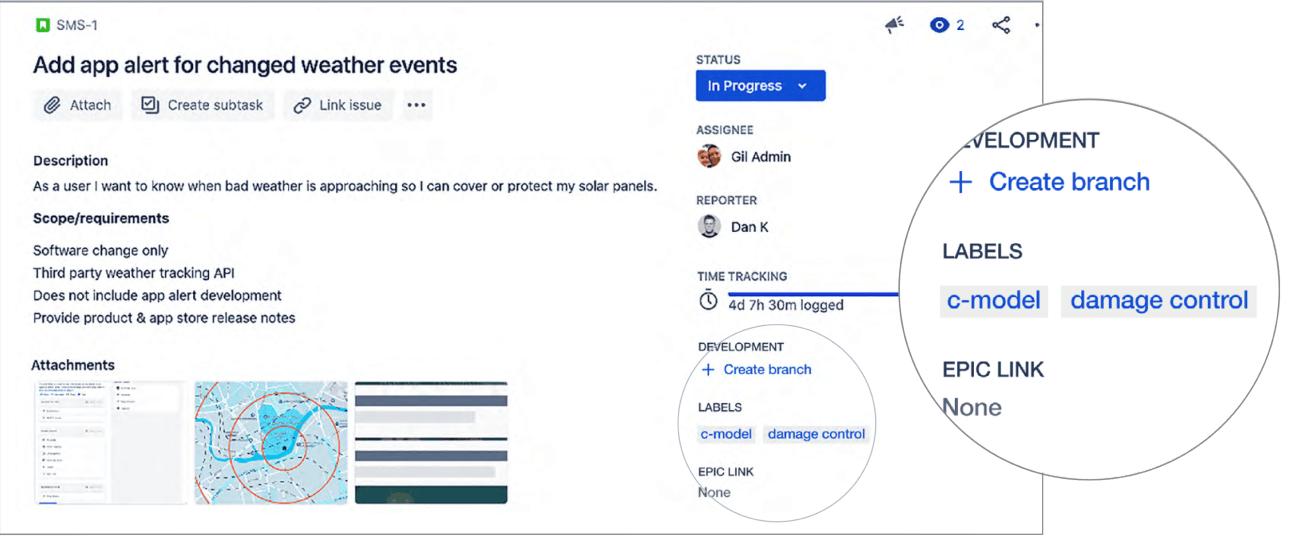

Recommended issue labels for every Jira issue

By implementing Tecton’s feature store, Atlassian dramatically improved the consistency of their data across environments. Geoff Sims, Data Scientist at Atlassian, enthusiastically reports:

“We have Tecton running at 99.9% accuracy across all of our features, with only minimal work required from us. This is a really phenomenal improvement.”

Geoff Sims

Data Scientist at Atlassian

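This jump from 96% to 99.9% consistency means the inconsistency rate between training and serving data fell from 4% to 0.1%, or 40x fewer errors. It has led to more accurate predictions and more reliable model performance, directly improving over 200,000 customer interactions every day in Jira and Confluence.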

90x faster: From 3 months to 1 day to deploy new features

Prior to adopting Tecton, Atlassian’s process for deploying new ML features was lengthy and cumbersome. “It could take anywhere from one to three months to get a new feature from development to production,” Hanson explains. “This slow turnaround time was a major bottleneck for our ML initiatives.”

The introduction of Tecton’s feature store has transformed Atlassian’s feature deployment process. Data scientists can now discover existing features to reuse across models, and build and deploy new features to production in just one day, down from as long as three months (roughly 90 days) before.

“The speed at which we can now iterate on our ML models is incredible. We can create accurate training datasets with just a few lines of code, and serve streaming features to production instantly without depending on data engineering teams to reimplement pipelines.”

Geoff Sims

Data Scientist at Atlassian

This dramatic reduction in deployment time has empowered Atlassian’s data scientists to innovate at a much faster pace, leading to rapid improvements in their ML-powered products.

3 FTEs saved: Freed from maintaining Atlassian’s initial homebuilt feature store

Before Tecton, Atlassian had invested significant resources in building and maintaining their own internal feature store. This homegrown solution, while functional, required constant attention and updates from the engineering team.

“We had about three full-time engineers dedicated to managing our internal feature store,” Hanson recalls. “While it served its purpose, it was a constant drain on our resources and limited our ability to focus on other high-priority projects.”

By adopting Tecton’s enterprise-ready feature store, Atlassian was able to reallocate these engineers to more strategic initiatives.

“Tecton has freed up precious engineering time. We no longer need to build and manage our own feature store, which allows us to focus on innovating and improving our core products.”

Joshua Hanson

Principal Engineer at Atlassian

This reallocation of resources has not only improved efficiency but has also accelerated Atlassian’s overall ML and AI initiatives.

“It’s something incredible”: Empowering data scientists and improving customer experiences

The impact of Tecton on Atlassian’s ML workflows goes beyond just numbers. It has fundamentally changed how their data scientists work and how quickly they can deliver value to customers.

Sims estimates that Tecton has sped up their development and QA process by up to 40%. “The ease of use of Tecton has enabled us to build new models that increased prediction accuracy up to 20% in some cases,” he explains.

For Hanson and his team, their dependence on other teams for feature engineering and deployment has significantly decreased. “We can now deliver new ML features that are, in some cases, months ahead of schedule compared to our previous workflows,” Hanson says. “The ability to manage feature definitions in a centralized repository and serve them consistently across training and production environments has been game-changing.”

The results are evident in Atlassian’s products. From more accurate user mentions and assignee suggestions in Jira to improved search functionality in Confluence, the enhancements powered by Tecton are directly improving the user experience for millions of Atlassian customers.

“When we showed how features can be updated and served instantly across environments, everyone was amazed. It’s something incredible. As a net result of using the Tecton feature store, we’ve improved over 200,000 customer interactions every day. This is a monumental improvement for us.”

Geoff Sims

Data Scientist at Atlassian

By providing the infrastructure to scale ML initiatives and support their long-term product vision, Tecton has become a cornerstone of Atlassian’s operational ML stack. It offers the confidence of a high-performing, always-available system that lets data scientists build new features and deploy them to production quickly and reliably. Ultimately, this supports Atlassian’s mission to unleash the potential of every team.
