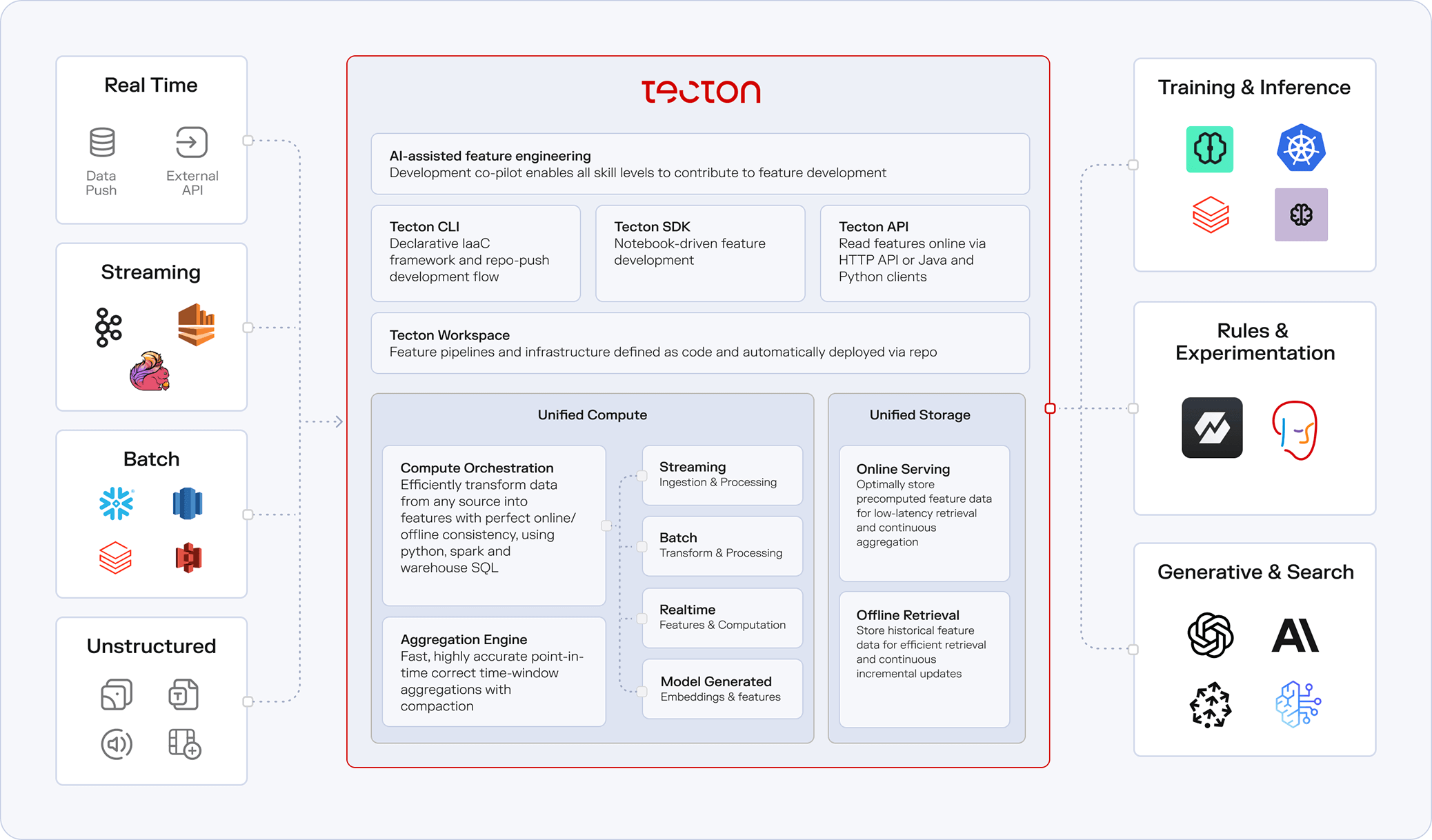

The feature store for real-time machine learning at scale.

TRUSTED BY TOP ENGINEERING TEAMS

Turn your raw data into production-ready features for business-critical use cases like fraud detection, risk scoring, and personalization. Tecton powers real-time decisions at scale—no pipeline rewrites required.

Provision your ML data pipelines using a standardized infrastructure-as-code description. Tecton automatically builds, updates, and manages the infrastructure, so you don’t have to.

Transform raw data into ML-ready features with sub-second freshness and serve them at sub-100ms latency.

Accelerate feature development with consistency from training to serving—no rewrites, no skew.

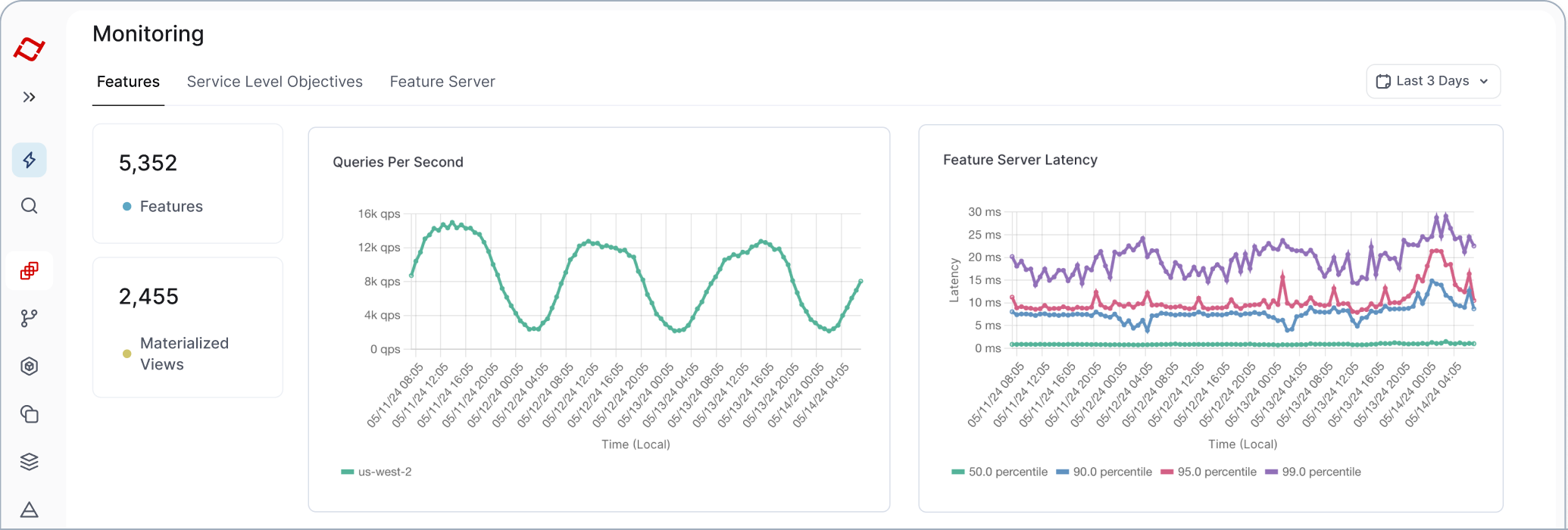

Proven at 100K+ QPS with 99.99% uptime for real-time ML use cases.

# Fraud Detection

Stop fraud in milliseconds with real-time behavioral signals.

# Risk Decisioning

Make instant decisions with streaming features and up-to-date applicant data.

# Credit Scoring

Deliver accurate, real-time credit decisions with fresh behavioral and historical data.

# Personalization

Tailor every product experience in real time with contextual data.

# Define

    @stream_feature_view(
        source=transactions,
        entities=[user],
        online=True,
        offline=True,
        features=[Aggregate("amount", "mean", td(mins=30))],
    )
    def stream_features(transactions):
        # Keep only the columns the aggregation needs.
        return transactions[["user_id", "timestamp", "amount"]]
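To make the aggregation in the definition above concrete, here is a minimal plain-Python sketch of a 30-minute trailing mean of `amount` per user. It is not Tecton's implementation and uses no Tecton APIs; the `RollingMean` class and its methods are hypothetical names introduced only for illustration, and the sketch assumes events for a given user arrive in timestamp order.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class RollingMean:
    """Per-key mean of amounts over a trailing time window (illustrative only)."""

    def __init__(self, window: timedelta):
        self.window = window
        # key -> deque of (timestamp, amount), oldest first
        self._events = defaultdict(deque)

    def add(self, key: str, ts: datetime, amount: float) -> None:
        """Record one event. Events per key are assumed to arrive in time order."""
        self._events[key].append((ts, amount))

    def mean(self, key: str, now: datetime):
        """Mean of amounts with now - window < timestamp <= now, or None if empty.

        Queries are assumed to move forward in time, because expired
        events are dropped permanently.
        """
        events = self._events[key]
        cutoff = now - self.window
        # Drop events that have fallen out of the window.
        while events and events[0][0] <= cutoff:
            events.popleft()
        if not events:
            return None
        return sum(amount for _, amount in events) / len(events)


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 12, 0)
    agg = RollingMean(timedelta(minutes=30))
    agg.add("user_1", start, 10.0)
    agg.add("user_1", start + timedelta(minutes=10), 30.0)
    print(agg.mean("user_1", start + timedelta(minutes=15)))
```

A production system would typically keep partial aggregates rather than raw events so that long windows stay cheap; the deque here trades efficiency for readability.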

# Train

    training_data = stream_features.get_features_for_events(training_events)
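The training step relies on each training row seeing only data that existed at the time of its event, which is what keeps training and serving consistent. The sketch below illustrates that idea in plain Python, under the assumption that the feature is the 30-minute mean of `amount` per user. It is not Tecton's implementation: `point_in_time_mean` and the output column `amount_mean_30m` are hypothetical names used only for this example.

```python
from datetime import datetime, timedelta


def point_in_time_mean(transactions, training_events, window=timedelta(minutes=30)):
    """Compute a leak-free windowed mean for each training event.

    transactions:    iterable of (user_id, timestamp, amount)
    training_events: iterable of (user_id, event_time)

    For each event, only that user's transactions in the interval
    (event_time - window, event_time] are averaged. Transactions after
    event_time are ignored so no future data leaks into the training row.
    """
    transactions = list(transactions)
    rows = []
    for user_id, event_time in training_events:
        amounts = [
            amount
            for tx_user, tx_time, amount in transactions
            if tx_user == user_id and event_time - window < tx_time <= event_time
        ]
        mean = sum(amounts) / len(amounts) if amounts else None
        rows.append(
            {"user_id": user_id, "timestamp": event_time, "amount_mean_30m": mean}
        )
    return rows


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 12, 0)
    txs = [
        ("user_1", start, 10.0),
        ("user_1", start + timedelta(minutes=20), 30.0),
        ("user_1", start + timedelta(minutes=40), 50.0),
    ]
    events = [("user_1", start + timedelta(minutes=25))]
    for row in point_in_time_mean(txs, events):
        print(row)
```

The nested scan is quadratic and only suitable for illustration; a real offline store would use a sorted or indexed join instead.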

    $ tecton workspace select prod
    $ tecton apply

99%

Faster: Time-to-production cut from 3 months to 1 day for new features.

7x

Growth in production ML use cases over 12 months

$20M+

Saved annually through fraud detection improvements

Define your features once in code—then get automatic streaming backfills, flexible compute across Python, Spark, and SQL, and guaranteed training–serving consistency so your models always behave as expected.

Mix-and-match Python (Ray & Arrow), Spark, and SQL compute for simplicity and performance

Feature correctness guaranteed, even with data processing delays and materialization windows

Sub-10ms latency with support for DynamoDB and Redis, built-in caching, autoscaling, and SLA-driven design

Immediate freshness, ultra-low latency at high scale, supporting multi-year windows and millions of events

Backfills generated from streaming feature code—no separate pipelines required

Pipelines deployed via code, with native support for CI/CD, version control, unit testing, lineage, and monitoring

Sub-100ms p99 latency and 99.99% uptime keep your features fresh and your services available. Auto-scaling and smart routing between Redis and DynamoDB deliver peak performance without any manual tuning.

Declarative Python framework and infrastructure as code to rapidly deploy data pipelines

Native streaming and real-time features incorporate the right signals and improve fraud and risk model quality

Eliminating train-serve skew to ensure the accuracy of fraud and risk predictions

Easy integration into your DevOps workflows

Reliable and efficient feature access at massive scale and low latency

Covers ISO 27001, SOC 2 Type 2, and PCI to meet security and deployment requirements for FSI

Unfortunately, Tecton does not currently support these clouds. We’ll make sure to let you know when this changes!

However, we are currently looking to interview members of the machine learning community to learn more about current trends.

If you’d like to participate, please book a 30-minute slot with us here and we’ll send you a $50 Amazon gift card after the interview in appreciation for your time.

or

Interested in trying Tecton? Leave us your information below and we’ll be in touch.
