How to Make the Jump From Batch to Real-Time Machine Learning

An increasing number of companies across all industries are making the switch from batch to real-time machine learning. The tools and technologies for real-time ML are maturing, and every company wants to become the Netflix of its industry: constantly responding to live signals, personalizing the user experience, and staying ahead of the competition.

Let’s take product recommendations and recommender systems (recsys) as an example. You can start with batch ML and make some progress. But if you include fresher signals, such as what the user did in the last few minutes, your recommendations will generally be more accurate.

But how do you move from batch ML to real-time ML? And does it make sense for your product? If you’re just starting to look at doing ML, does it make sense to invest in real-time ML right off the bat?

In this post, I’ll cover the differences between batch and real-time ML and how you can assess when and whether to make the leap.

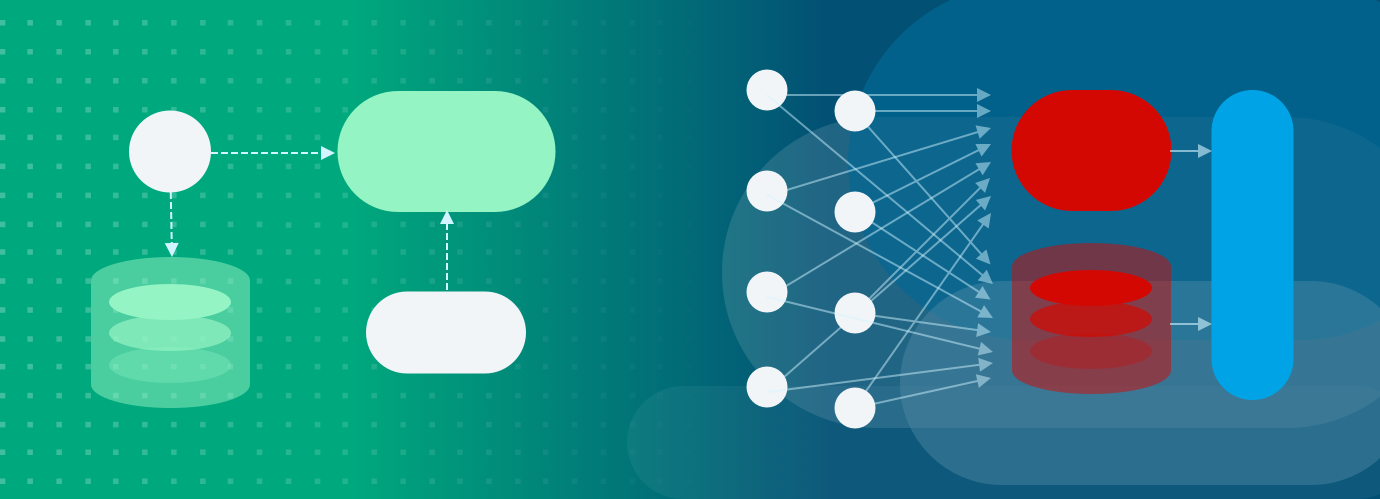

From batch to real-time machine learning

The terminology around batch and real-time ML is confusing since the industry has a lot of different terms that are used interchangeably. To further add to the communication challenges, it’s actually more precise to talk about real-time features vs. batch features and real-time models vs. batch models. So let’s start by clearing up some terminology and sharing how we think about real-time ML at Tecton.

Batch / offline ML

Batch and offline are used synonymously in an ML context. Batch arose from processing data in batch jobs. Offline arose from the idea that ML was not part of the networked/online path of the product. Both have evolved to designate ML models that generate predictions on a periodic, infrequent basis. A rule of thumb is that if you update your feature values or predictions less frequently than 1x per hour, that’s batch / offline ML.

We use “batch ML” rather than “offline ML” because it’s generally more accepted in the industry. The architecture used for batch ML is relatively straightforward.

Real-time ML

Real-time ML is sometimes called online ML, but that term doesn’t come up as frequently since the term online ML is easily confused with the technique of training models via online learning. A rule of thumb is if you update your feature values or predictions more frequently than 1x per hour, that’s real-time ML.
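To make the one-hour rule of thumb concrete, here is a minimal Python sketch. The function name `classify_ml_mode` and the constant `HOURLY` are my own illustrative names, not part of any library, and treating an exactly hourly refresh as real-time is an assumption, since the rule of thumb leaves that boundary case open.

```python
from datetime import timedelta

# Rule-of-thumb boundary from the text: one update per hour.
HOURLY = timedelta(hours=1)


def classify_ml_mode(update_interval: timedelta) -> str:
    """Label a workload by how often its features or predictions are refreshed."""
    if update_interval <= timedelta(0):
        raise ValueError("update_interval must be positive")
    # A shorter interval means more frequent updates.
    # Assumption: an exactly hourly refresh is counted as real-time.
    return "real-time" if update_interval <= HOURLY else "batch"


print(classify_ml_mode(timedelta(days=1)))     # nightly refresh
print(classify_ml_mode(timedelta(seconds=5)))  # near-continuous refresh
```

The same check applies whether the thing being refreshed is a feature value or a prediction, which is why it helps to classify the two separately.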

Real-time features

As discussed above, real-time ML consists of generating predictions online, with at least an hourly frequency. These online predictions can be generated from either batch or real-time features. It’s important to distinguish between the two since they lead to vastly different architectures. In short:

- Real-time features are signals that are fresher than one hour

- Batch features use the entire history of the data from one hour ago back to the Big Bang

Real-time predictions (often used interchangeably with “real-time models” or “real-time inference”) are predictions that are made “in the moment.” Compare this to batch predictions that are pre-computed and retrieved when needed. Though operational ML (ML that runs in your product) is frequently doing real-time predictions, it doesn’t have to be. Often, recsys takes the batch approach, pre-computing predictions for all users and then serving those recommendations when the person logs on.
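The difference between pre-computed and in-the-moment predictions can be sketched in a few lines of Python. Everything here is hypothetical: `score` is a stand-in for a real model, and the dictionary stands in for whatever store would hold pre-computed recommendations.

```python
from typing import Dict, List


def score(user_id: str) -> List[str]:
    """Stand-in for a real model: returns a deterministic list of item IDs."""
    return [f"item-{(len(user_id) + i) % 5}" for i in range(3)]


def precompute_batch(user_ids: List[str]) -> Dict[str, List[str]]:
    """Batch predictions: score every known user ahead of time."""
    return {uid: score(uid) for uid in user_ids}


def serve_batch(store: Dict[str, List[str]], user_id: str) -> List[str]:
    """Serving is a lookup; users missing from the last batch run get nothing."""
    return store.get(user_id, [])


def serve_realtime(user_id: str) -> List[str]:
    """Real-time predictions: run the model at request time."""
    return score(user_id)


store = precompute_batch(["alice", "bob"])
print(serve_batch(store, "alice"))   # served from the pre-computed store
print(serve_batch(store, "carol"))   # empty: carol signed up after the batch run
print(serve_realtime("carol"))       # computed on demand
```

In the batch path the model runs once per user per job, whether or not that user ever shows up. In the real-time path the model runs only when a request arrives.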

The holy grail of real-time ML is real-time features and real-time predictions. That said, it’s fairly common (and might make sense, depending on your use case) to have a real-time model that uses only batch features, such as a credit scoring model that uses features based on someone’s historical transactions but does not pre-compute its predictions ahead of time.

However, many real-time ML models will make more accurate predictions by using real-time features. Take, for example, the use case of predicting a delivery time for a meal delivery service. That prediction can be made using historical data (how long does the restaurant usually take to prepare the meal, what is the traffic situation normally like at this time of day, etc.). But the prediction will be more accurate by incorporating real-time data (how busy is the restaurant right now, how bad is the traffic right now, etc.).
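As a rough illustration of the delivery-time example, the sketch below combines batch features with real-time ones. The feature names, the additive formula, and the `minutes_per_open_order` parameter are all assumptions made up for illustration; a real system would learn this relationship from data rather than hard-code it.

```python
from dataclasses import dataclass


@dataclass
class BatchFeatures:
    avg_prep_minutes: float       # historical average prep time for the restaurant
    typical_drive_minutes: float  # usual drive time at this time of day


@dataclass
class RealTimeFeatures:
    open_orders: int              # orders in the restaurant's queue right now
    traffic_multiplier: float     # current traffic vs. normal (1.0 = typical)


def estimate_batch_only(b: BatchFeatures) -> float:
    """Estimate from historical data alone."""
    return b.avg_prep_minutes + b.typical_drive_minutes


def estimate_with_realtime(
    b: BatchFeatures,
    r: RealTimeFeatures,
    minutes_per_open_order: float = 1.5,  # hypothetical, hand-picked value
) -> float:
    """Adjust the historical estimate with what is happening right now."""
    prep = b.avg_prep_minutes + minutes_per_open_order * r.open_orders
    drive = b.typical_drive_minutes * r.traffic_multiplier
    return prep + drive


history = BatchFeatures(avg_prep_minutes=15.0, typical_drive_minutes=10.0)
right_now = RealTimeFeatures(open_orders=4, traffic_multiplier=1.5)
print(estimate_batch_only(history))                # 25.0
print(estimate_with_realtime(history, right_now))  # 36.0
```

On a busy evening the two estimates diverge, and the batch-only number has no way to know it.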

Is Real-Time ML Right for You?

Figuring out if you’re ready to make the jump to real-time may look different depending on where you’re starting from.

Starting from an existing batch ML use case

If you are coming from batch ML and thinking of moving to real-time ML for the same use case, it may be because you’re dealing with one of the following issues:

- Accuracy. At some point, there’s only so much that you can get from batch features and predictions. Fresh signals can give you better model performance.

- Cold-start problem. For new customers, batch ML isn’t going to give you any predictions, and this can be a cause of churn as people have come to expect some personalization right away when they interact with a product.

- Costs. Batch ML for something like recsys can be more expensive than doing real-time predictions. With batch, you have to pre-compute predictions for all your users every single day (or whatever cadence you have set up). This is a waste of computing power for people who rarely visit your site. With real-time predictions, you generate predictions “on-demand” and on an as-needed basis.
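The cost argument comes down to how many predictions you compute. Here is a back-of-the-envelope sketch; the user counts and activity rates are made-up inputs, not benchmarks, and the comparison counts predictions only, so it ignores any difference in per-prediction cost between a batch job and an online serving path.

```python
def batch_predictions_per_day(total_users: int, runs_per_day: int = 1) -> int:
    """Batch scores every user on every run, active or not."""
    return total_users * runs_per_day


def on_demand_predictions_per_day(
    total_users: int,
    daily_active_fraction: float,
    requests_per_active_user: int,
) -> int:
    """On-demand scores only the users who actually show up."""
    return round(total_users * daily_active_fraction * requests_per_active_user)


# Hypothetical product: 1M registered users, 5% active per day, 3 requests each.
batch = batch_predictions_per_day(1_000_000)
on_demand = on_demand_predictions_per_day(1_000_000, 0.05, 3)
print(batch, on_demand)  # 1000000 150000
```

The picture flips if most users are active many times a day, so it is worth plugging in your own traffic numbers before assuming either approach is cheaper.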

In short, the move to real-time ML is often about personalization. Consumers increasingly expect products to know who they are and react to them immediately. Personalizing the experience based not just on what they did yesterday or over the last two years, but on what they’re doing in the moment with the product, can go a long way.

Starting from scratch

On the other hand, if you have a new application and you’re wondering if you should use real-time ML, it really depends on the contract that you have with the user. How much do you need to be able to react to what’s happening now vs. how much impact can you have based on all the historical data that you know about?

There are use cases, such as recsys, where you can have a positive impact with a batch model and improve the impact with a real-time model. But there are other use cases where you wouldn’t even consider having a batch model, like a credit card processor, where you have to decide at the moment of the transaction whether to let it go through or block it.

One way to get at the answer is to look at what others in your industry are doing, whether that’s ecommerce, fraud, credit-card processing, etc. When industry comps aren’t possible, you can use intuition to a degree. If you personally had all the time in the world to make a recommendation to the user, what information do you think would be most useful for you to hand-craft the ultimate recommendation? Is it information about what the user has just done or can you get a pretty good understanding of it by looking at the history of their behavior up until an hour ago?

Backtesting

Whether you have a new model or an existing one, one option to evaluate the jump to real-time ML is to do rapid prototyping or backtesting. You may be able to run your model offline and look at the expected precision and recall improvements by introducing more real-time signals than you have available in production.
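A backtest of this kind boils down to scoring the same labeled history twice, once with and once without the fresher signals, and comparing precision and recall. The sketch below is a plain-Python version of that comparison. The label and prediction arrays are made up purely to exercise the function and are not results from any real model.

```python
from typing import Sequence, Tuple


def precision_recall(
    y_true: Sequence[int], y_pred: Sequence[int]
) -> Tuple[float, float]:
    """Compute precision and recall for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Toy data: the same historical labels, scored by two candidate models.
labels            = [1, 0, 1, 1, 0, 0, 1, 0]
batch_only_preds  = [1, 1, 0, 1, 0, 0, 0, 0]
with_fresh_preds  = [1, 0, 1, 1, 0, 1, 1, 0]

print("batch features only:", precision_recall(labels, batch_only_preds))
print("with fresh signals: ", precision_recall(labels, with_fresh_preds))
```

For the comparison to be fair, the fresh signals replayed in the backtest should be limited to what would have been known at prediction time.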

But you have to be careful because you can’t validate the performance impact entirely by doing backtesting. You can’t backtest network effects; i.e., when your application’s prediction influences the audience’s future behavior. Some examples of network effects:

- For a fraud model, adversaries may quickly learn and adapt to your fraud detection.

- For recsys, if you start recommending one set of items, that might affect the items users click on next.

- For real-time pricing, pricing updates might motivate your users to make different choices (e.g., choosing a less expensive item or not using your service at all).

In many cases, you may need to put a model into production to see what type of lift you get.

Your engineering stack

Outside of the use case considerations and overall value, an important thing to consider is how your engineering stack is set up right now. Put bluntly, are you ready for the jump to real-time ML or do you need to invest more in your tech stack before you can get there?

Real-time ML isn’t something you can drop on top of any architecture. Your underlying stack needs to be at a certain level of maturity. If you’re running everything on-premises on what is essentially a monolithic system that processes data in batch once a day or once a week and outputs everything to a transactional DB, you have work to do to mature your operational data stack first.

On the other hand, good indicators that your data stack is ready for real-time ML are that you have streaming infrastructure like Kafka or Kinesis in place and that your system uses a decoupled, event-driven microservices architecture.

Compared to streaming features, real-time features coming from your product or a third-party API can be easier to set up. However, real-time features are in the computation path of your model, so they will directly impact your prediction latency; any delays for computing more complex features will be reflected in the user experience. And any data pipelines for serving real-time features become part of the production infrastructure you’ll need to maintain for your model.
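Because these features are computed in the request path, it helps to add up their latencies against whatever response-time budget your product has. The sketch below does that accounting. The feature names, the latency figures, and the 50 ms budget are all hypothetical, and the model assumes features are either all fetched in parallel or all fetched one after another.

```python
from typing import Dict


def prediction_latency_ms(
    feature_latencies_ms: Dict[str, float],
    inference_ms: float,
    fetch_in_parallel: bool = True,
) -> float:
    """Estimate end-to-end latency of one prediction request."""
    if not feature_latencies_ms:
        feature_ms = 0.0
    elif fetch_in_parallel:
        # Parallel fetches are only as fast as the slowest feature.
        feature_ms = max(feature_latencies_ms.values())
    else:
        # Sequential fetches pay for every feature in turn.
        feature_ms = sum(feature_latencies_ms.values())
    return feature_ms + inference_ms


# Hypothetical request-time features and their fetch/compute latencies.
features = {"cart_contents": 5.0, "third_party_risk_score": 80.0}
budget_ms = 50.0

total = prediction_latency_ms(features, inference_ms=10.0)
print(total, "within budget" if total <= budget_ms else "over budget")
```

Here a single slow third-party call blows the budget on its own, which is the kind of dependency worth spotting before it becomes part of your production path.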

Wrapping up

Real-time ML is valuable when you need to build a feature that reacts immediately to new context or new signals from users. While this is a must for use cases like fraud detection, it’s also increasingly becoming table stakes for any app that does personalization. Users’ intent can easily change from hour to hour. That intent may not be explicitly stated by the user, but the product can learn what the intent is from the user’s behavior and personalize to it using real-time ML.

You can do backtesting to validate if real-time features will provide a significant lift to your model, keeping in mind that you can only measure the impact of network effects in production. If real-time ML sounds like a good fit for your use case, it’s important to consider whether you have the right stack in place.

Interested in learning more? Check out this webinar, where Claypot AI CEO Chip Huyen and Tecton CTO Kevin Stumpf discuss how to assess the incremental value of real-time ML for different use cases, evaluate if your tech stack is “real-time ready” and how to get there if not, and more.